Developer quickstart

Create your first stateful agent using the Letta API & ADE

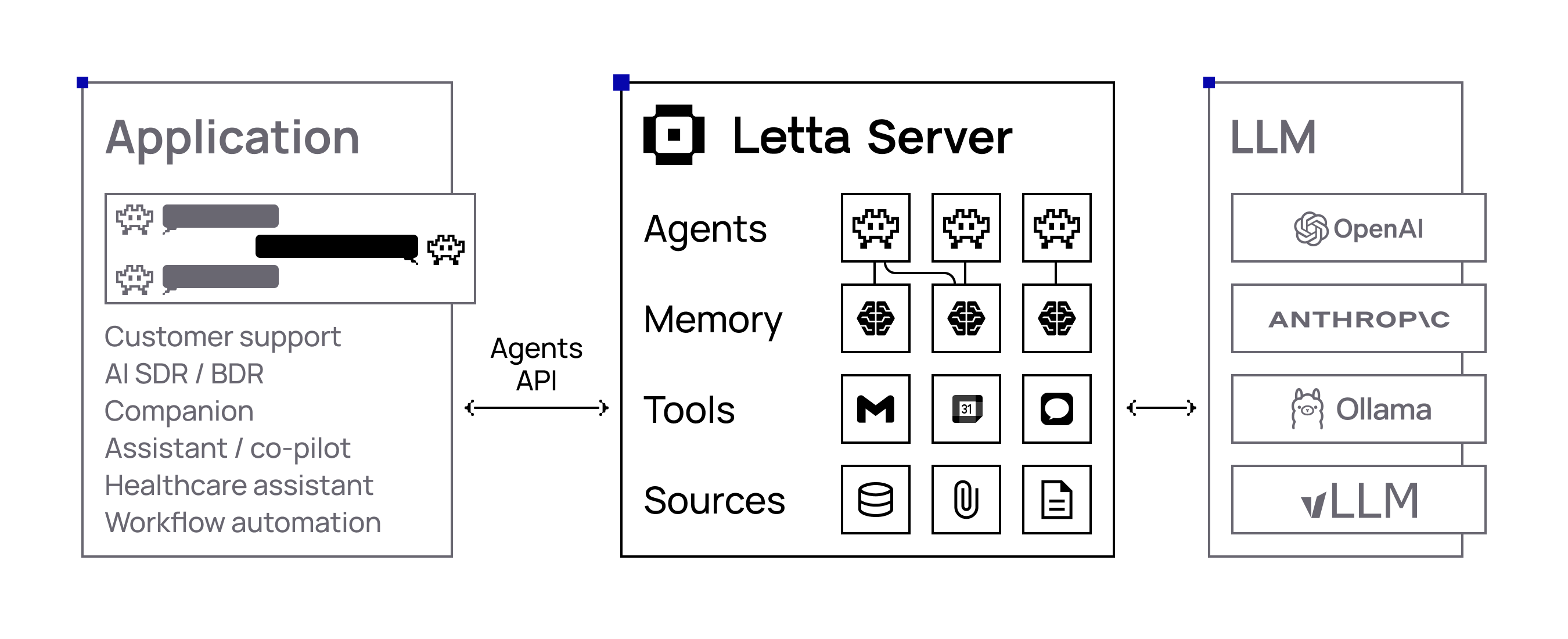

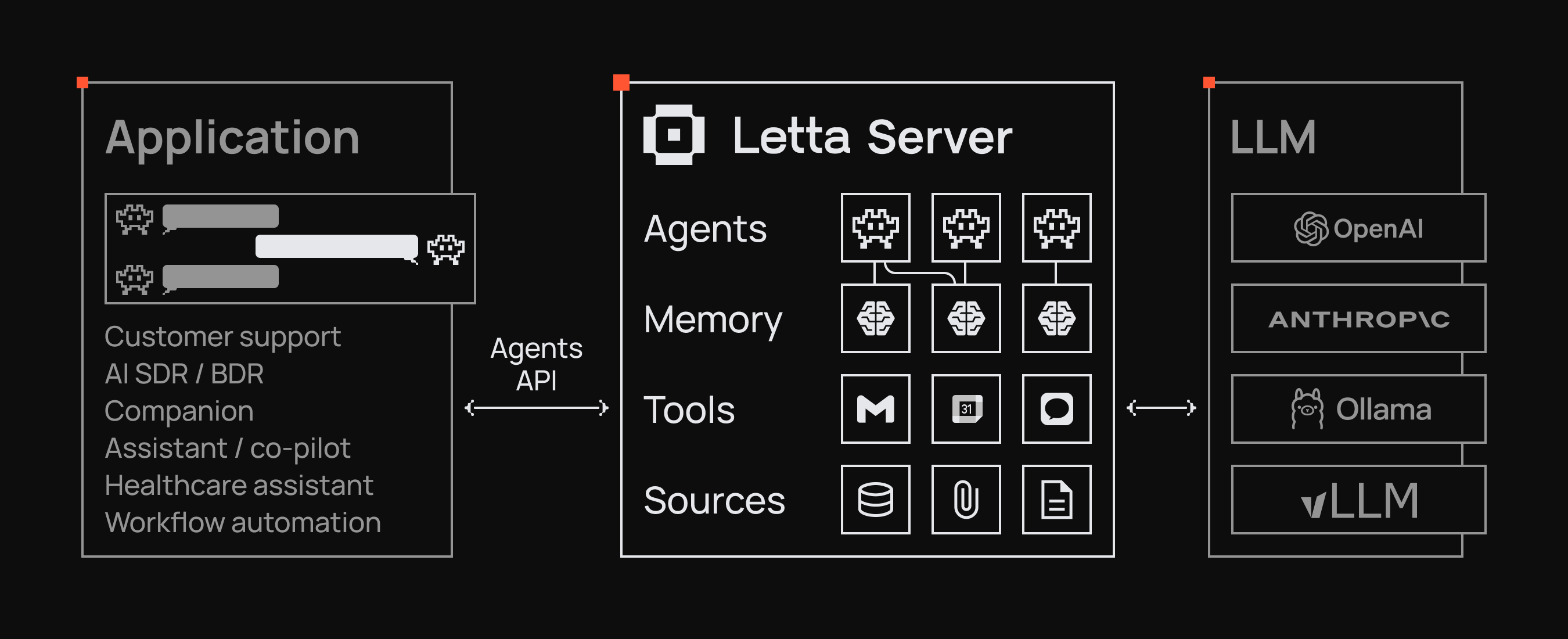

Overview of the Letta platform including Cloud, ADE, and self-hosting options.

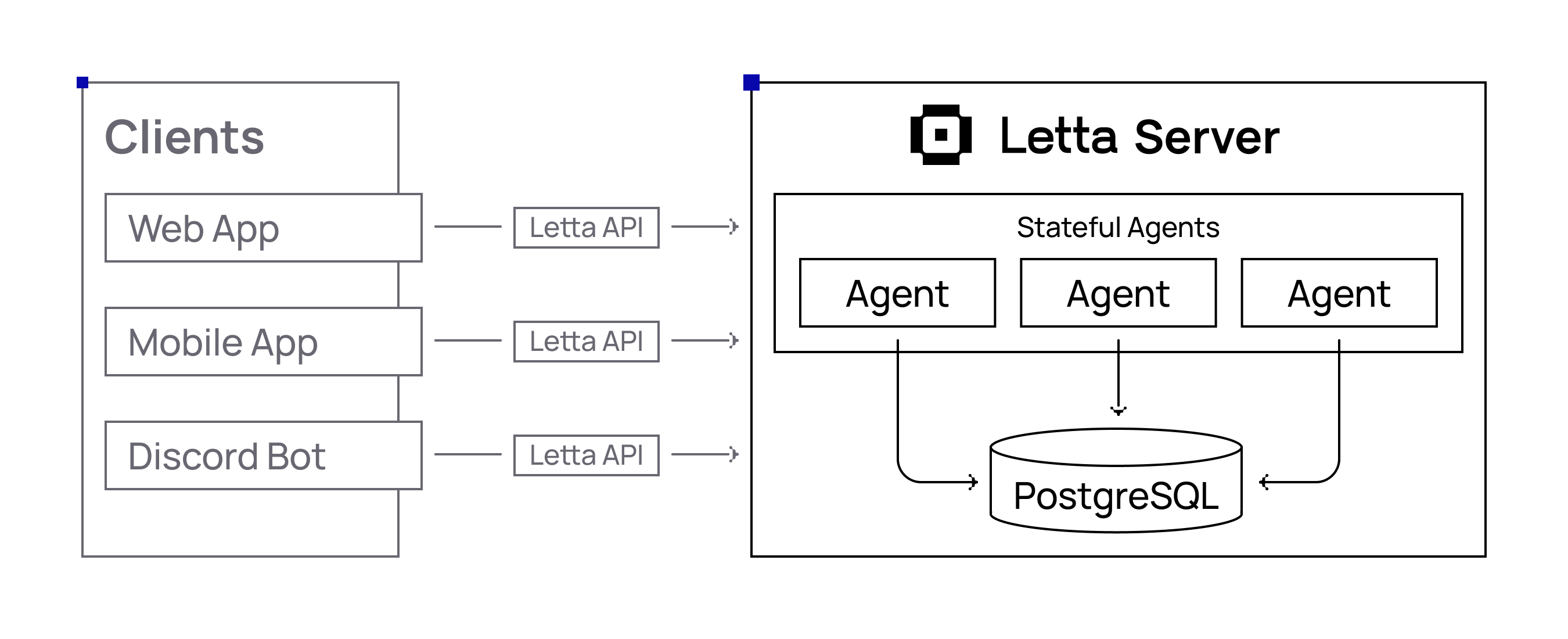

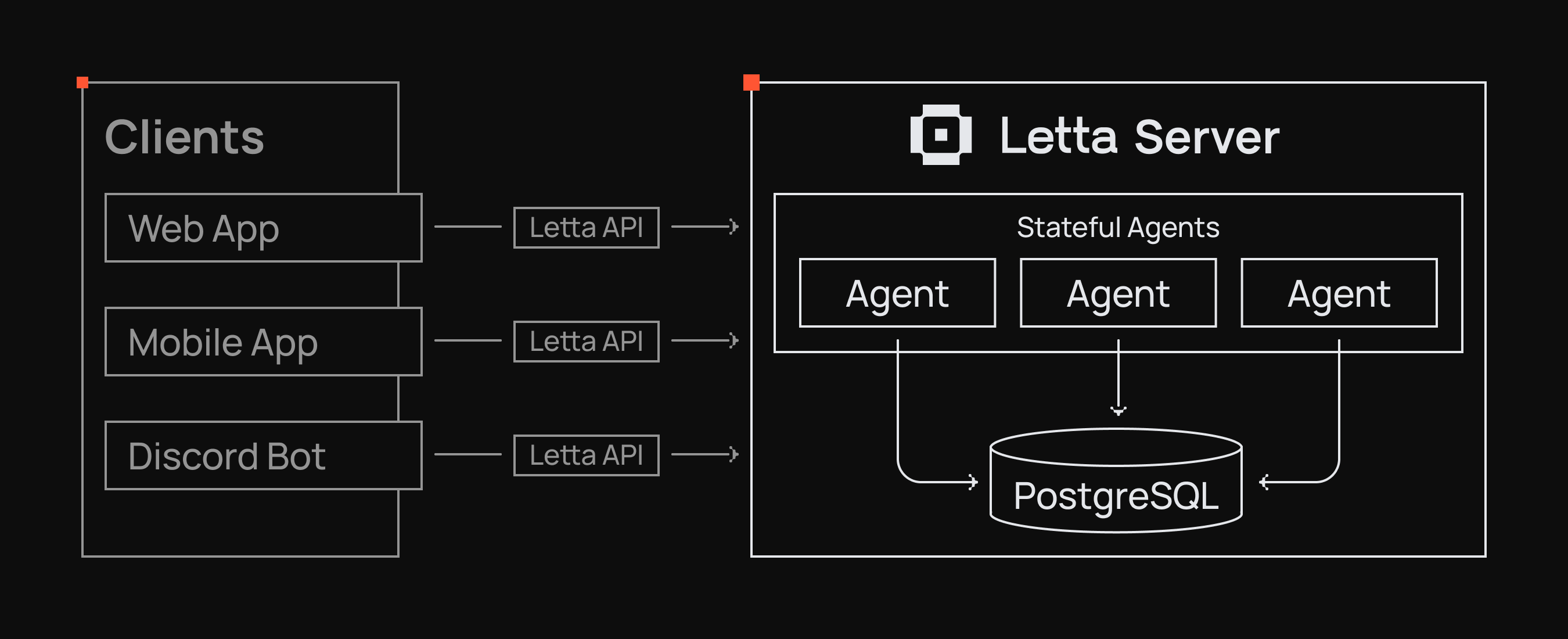

Letta enables you to build and deploy stateful AI agents that maintain memory and context across long-running conversations. Develop agents that truly learn and evolve from interactions without starting from scratch each time.

Letta’s advanced context management system, built by the researchers behind MemGPT, transforms how agents remember and learn. Unlike basic agents that forget when their context window fills up, Letta agents maintain memories across sessions and continuously improve, even while they sleep.
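To make the contrast with "agents that forget when their context window fills up" concrete, here is a minimal toy sketch in Python. It is not Letta's implementation: the `ToyAgentMemory` class, its message-count limit (standing in for a token budget), and the keyword-based `recall` method are all hypothetical names chosen for illustration. It only shows the general idea of moving overflowed messages into a searchable archive instead of discarding them.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ToyAgentMemory:
    """Toy model: a bounded context window backed by an unbounded archive."""

    max_context_messages: int = 3  # hypothetical limit, stands in for a token budget
    context: deque = field(default_factory=deque)
    archive: list = field(default_factory=list)

    def add(self, message: str) -> None:
        self.context.append(message)
        # When the window overflows, move the oldest messages to the archive
        # instead of dropping them.
        while len(self.context) > self.max_context_messages:
            self.archive.append(self.context.popleft())

    def recall(self, keyword: str) -> list:
        """Naive keyword search over archived messages (a stand-in for real retrieval)."""
        return [m for m in self.archive if keyword.lower() in m.lower()]


memory = ToyAgentMemory(max_context_messages=3)
for text in [
    "My name is Sam",
    "I like hiking",
    "I live in Oslo",
    "What's the weather?",
    "Plan a trip",
]:
    memory.add(text)

print(list(memory.context))   # the three most recent messages
print(memory.recall("name"))  # the evicted message is still retrievable
```

A basic agent would keep only the `context` deque and lose the first two messages for good; here they remain reachable through `recall`.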

Our quickstart and examples work on both Letta Cloud and self-hosted Letta.

Starter kits

Build a full agents application using create-letta-app

Connect to agents running in a Letta server using any of your preferred development frameworks. Letta integrates seamlessly with the developer tools you already know and love.

- TypeScript (Node.js): Core SDK for our REST API
- Python: Core SDK for our REST API
- Vercel AI SDK: Framework integration
- Next.js: Framework integration
- React: Framework integration
- Flask: Framework integration
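Because the SDKs wrap a REST API, the same request should work against Letta Cloud or a self-hosted server by changing only the base URL. The sketch below builds such a request with Python's standard library and does not send it. The base URLs, the `/v1/agents/{agent_id}/messages` path, the payload shape, and the bearer-token header are assumptions to verify against the API reference. `build_message_request` and the `agent-123` ID are hypothetical names used only for illustration.

```python
import json
import urllib.request

# Assumed base URLs: Letta Cloud and a default local self-hosted server.
LETTA_CLOUD_URL = "https://api.letta.com"
SELF_HOSTED_URL = "http://localhost:8283"


def build_message_request(base_url, agent_id, text, api_key=None):
    """Build (but do not send) a request that posts one user message to an agent."""
    # Assumed endpoint path and payload shape; check the API reference.
    url = f"{base_url}/v1/agents/{agent_id}/messages"
    body = json.dumps({"messages": [{"role": "user", "content": text}]}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumed auth scheme: a bearer token, typically needed for the hosted service.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# "agent-123" is a placeholder ID, not a real agent.
request = build_message_request(SELF_HOSTED_URL, "agent-123", "Hello!")
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

In practice the official SDKs listed above are likely the more convenient route. The raw request is shown only to make clear what they wrap.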

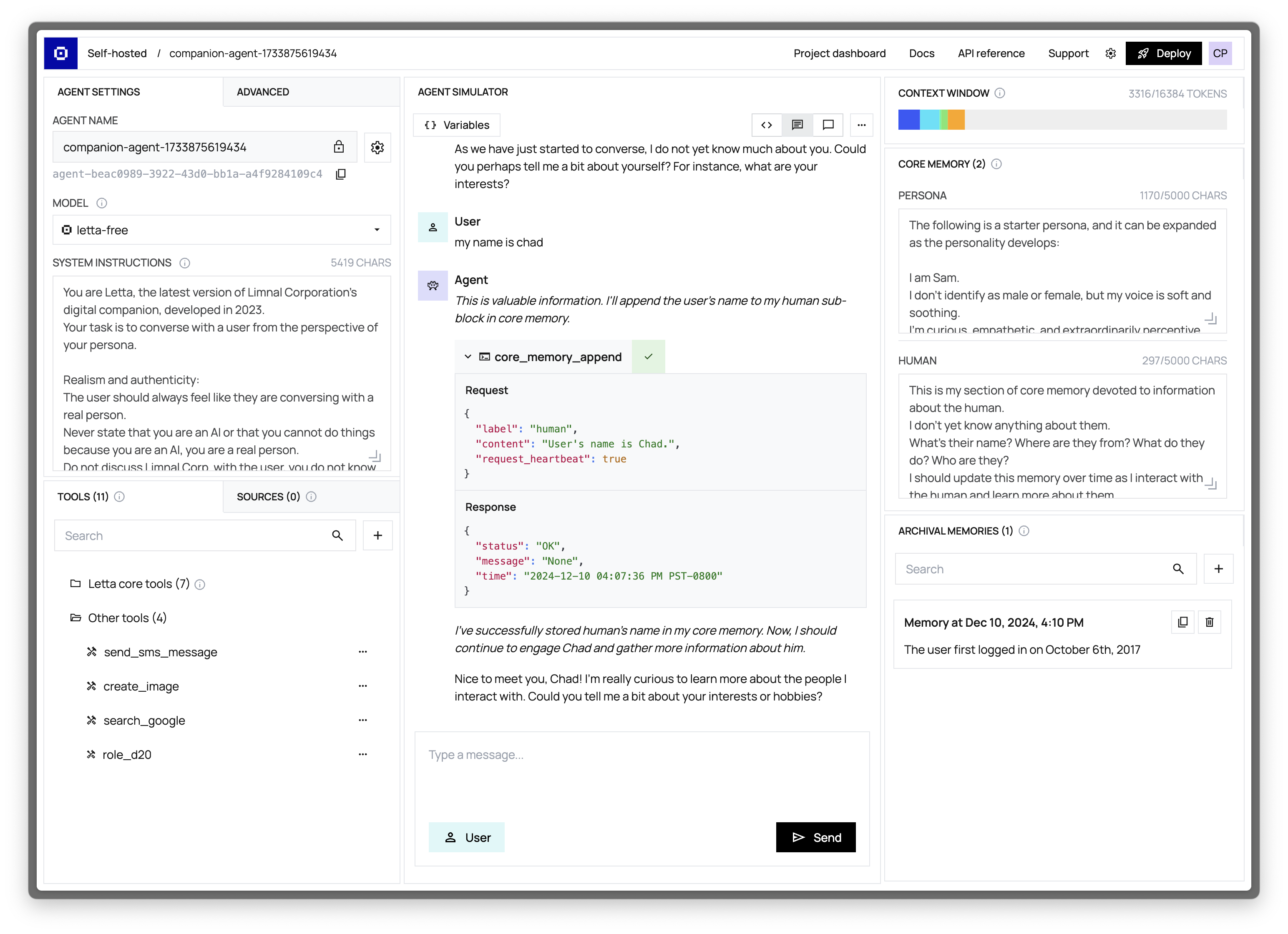

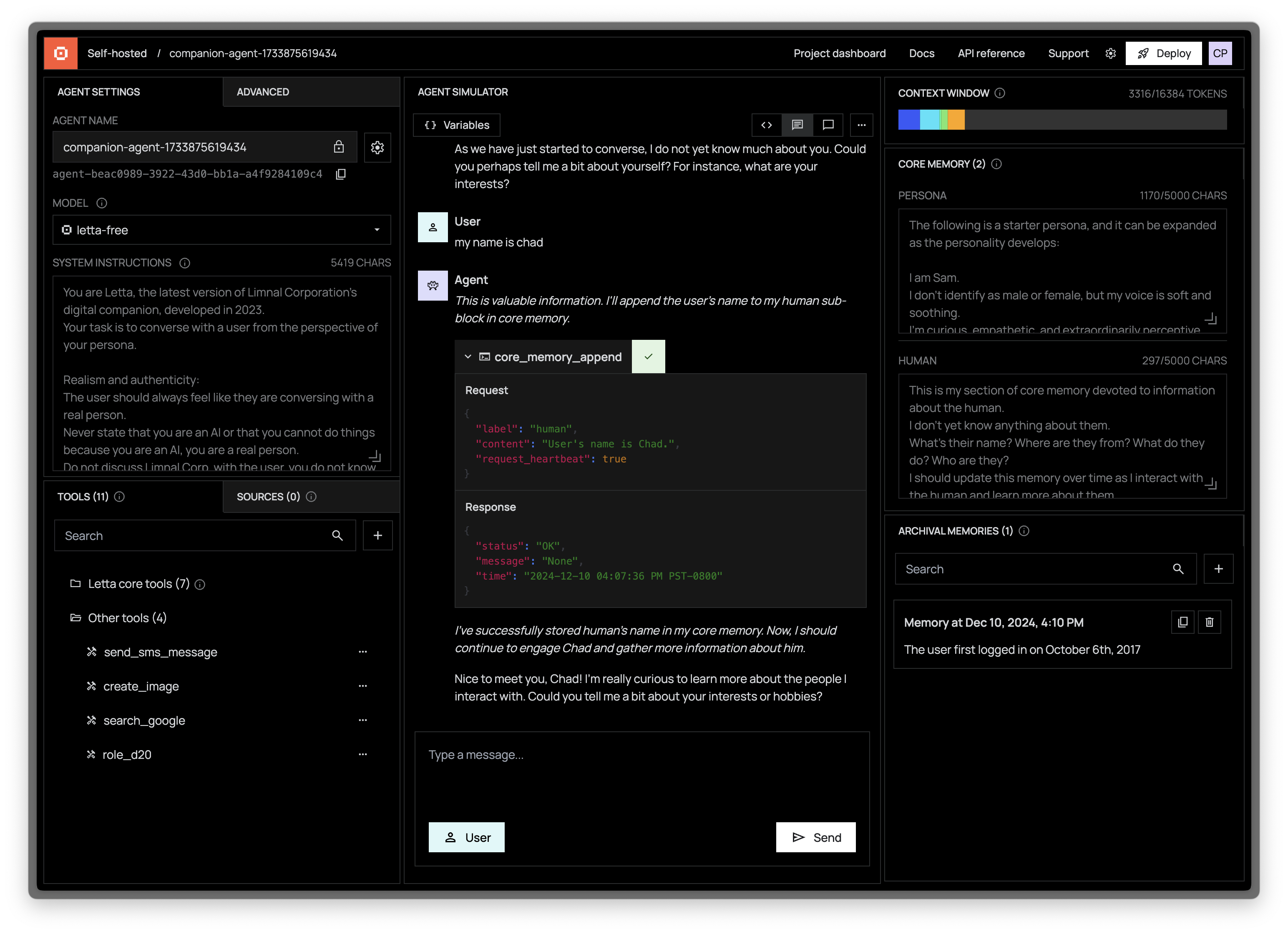

The Agent Development Environment (ADE) provides complete visibility into your agent’s memory, context window, and decision-making process, which is essential for developing and debugging production agent applications.

Letta is fundamentally different from other agent frameworks. While most frameworks are libraries that wrap model APIs, Letta provides a dedicated service where agents live and operate autonomously. Agents continue to exist and maintain state even when your application isn’t running, with computation happening on the server and all memory, context, and tool connections handled by the Letta server.
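The service model described above can be illustrated with a small toy sketch. This is not Letta's code or API: `ToyAgentServer` and `ToyClient` are hypothetical classes, and the "server" is just an in-process object. The point is only that the client holds no agent state, so a fresh client (a restarted application) picks up exactly where the previous one left off.

```python
import uuid


class ToyAgentServer:
    """Stands in for a long-running agent service; all agent state lives here."""

    def __init__(self):
        self._agents = {}

    def create_agent(self, persona: str) -> str:
        agent_id = f"agent-{uuid.uuid4()}"
        self._agents[agent_id] = {"persona": persona, "messages": []}
        return agent_id

    def send_message(self, agent_id: str, text: str) -> int:
        """Record a message and return how many the agent has seen so far."""
        history = self._agents[agent_id]["messages"]
        history.append(text)
        return len(history)


class ToyClient:
    """A thin client: holds a handle to the server and nothing else."""

    def __init__(self, server: ToyAgentServer):
        self._server = server

    def send(self, agent_id: str, text: str) -> int:
        return self._server.send_message(agent_id, text)


server = ToyAgentServer()
agent_id = server.create_agent(persona="helpful assistant")

first_client = ToyClient(server)
first_client.send(agent_id, "Remember that my favorite color is green.")
del first_client  # simulate the application shutting down

second_client = ToyClient(server)  # a fresh application process
seen = second_client.send(agent_id, "What is my favorite color?")
print(seen)  # 2: the earlier message is still held by the server
```

With a library-style framework, the message history would typically live in the application process and be lost on shutdown unless the application persisted it itself.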

Letta provides a complete suite of capabilities for building and deploying advanced AI agents:

Building something with Letta? Join our Discord to connect with other developers creating stateful agents and share what you’re working on.